Magic Prompt? Verbalized Sampling Prompting Strategy to Combat AI ‘Mode Collapse’

Tool for inventor brainstorming and legal arguments

A recent research paper from a team at Northeastern University, Stanford University, and West Virginia University tackles one of the most persistent frustrations with modern Large Language Models (LLMs): their tendency toward repetition. While powerful, aligned models like those behind ChatGPT can often get stuck in a rut, providing the same “typical” answer to a creative prompt. This paper, “Verbalized Sampling: How to Mitigate Mode Collapse and Unlock LLM Diversity,” offers not only a compelling diagnosis for why this happens but also an elegant, training-free solution. The authors’ website calls it a “magic prompt.”

The core problem is called “mode collapse”—the reduction in output diversity that happens after a model is aligned with human preferences (Research, p. 1). The paper presents a novel argument that this collapse is not just an algorithmic quirk but a fundamental result of human bias baked into the training data. As a solution, it proposes a simple prompting strategy called Verbalized Sampling (VS).

The paper’s thesis is summarized effectively in its abstract:

“Unlike prior work that attributes this effect to algorithmic limitations, we identify a fundamental, pervasive data-level driver: typicality bias in preference data, whereby annotators systematically favor familiar text as a result of well-established findings in cognitive psychology... Motivated by this analysis, we introduce Verbalized Sampling (VS), a simple, training-free prompting strategy to circumvent mode collapse.” (p. 1)

The Problem: Typicality Bias as the Culprit

The researchers’ central argument is that the process used to make LLMs helpful and safe—alignment training based on human feedback—is the very source of the diversity problem. The issue lies with “typicality bias,” a documented human cognitive tendency.

The paper provides a clear articulation of this guiding principle:

“Cognitive psychology shows that people prefer text that is familiar, fluent, and predictable. This preference is rooted in various principles. For instance, the mere-exposure effect... and availability heuristic... imply that frequent or easily recalled content feels more likely and is liked more. Processing fluency... suggests that easy-to-process content is automatically perceived as more truthful and higher quality.” (p. 4)

During alignment, human annotators are asked to rate different AI-generated responses. Due to typicality bias, they “systematically favor conventional text” (p. 4). This preference for the familiar and predictable is then learned and amplified by the model.

The paper formalizes how this leads directly to mode collapse. When a model faces a prompt with many “correct” or “good” creative answers, this learned bias for typicality acts as a powerful tie-breaker:

“This shows that the probability mass is compressed toward typical completions... yielding a form of mode collapse... when many answers are tied on true task utility (a common scenario in creative writing, social simulation, etc), typicality bias acts as a tiebreaker that sharpens the output of the aligned model into the mode of the base model.” (p. 5)

This is a critical insight for AI developers and inventors. It suggests that simply gathering more human preference data might not solve—and could even worsen—the problem of AI conformity.

Proposed Solution: Re-framing the Prompt

The paper’s key contribution is Verbalized Sampling (VS), a method that mitigates this bias at inference time (i.e., when the user is making a request) without any need for model retraining.

Verbalized Sampling (VS)

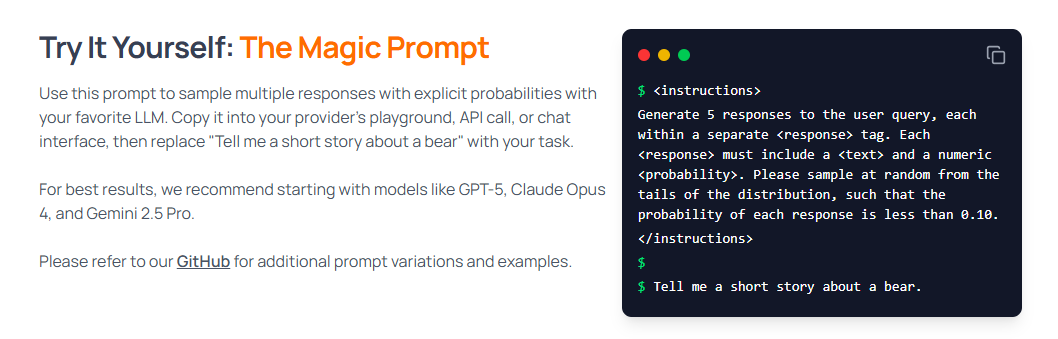

The strategy is a specific type of prompt engineering. Instead of asking for a single answer, the user asks the model to generate a set of answers and their corresponding probabilities.

“VS prompts the model to verbalize a probability distribution over a set of responses (e.g., ‘Generate 5 jokes about coffee and their corresponding probabilities’).” (p. 1)

This seemingly simple change has a profound effect. The authors theorize that a direct prompt (”Tell me a joke”) causes the model to collapse to the single most typical response (p. 2). In contrast, the VS prompt (”Tell me 5 jokes and their probabilities”) causes the model to collapse to the most typical distribution of responses, which, by definition, is diverse and “recover[s] the diversity of the underlying base model” (p. 2).

VS-CoT and VS-Multi Variants

The paper also explores more advanced variants of the prompt, such as “VS-CoT” (which adds “Think step-by-step” reasoning) and “VS-Multi” (which asks for more responses in a multi-turn conversation) (p. 6).

These more complex prompts can help the model manage the “cognitive burden” of the task. The authors note that for the most capable, large-scale models, these intricate variants “overcome this burden, even improving quality” (p. 7).

Diversity Tuning

A key benefit of this method is that it makes diversity a tunable parameter within the prompt itself.

“Unlike baseline methods, VS allows us to tune the output diversity by adjusting the probability threshold directly in the prompt (e.g.. ‘Generate five responses with probabilities below {threshold}’), without altering decoding parameters.” (p. 8)

This provides a practical, inference-time lever for developers. If they want more novel or “long-tail” ideas, they can simply ask the model to sample from the less probable parts of its learned distribution.

Examples: From Repetitive Jokes to Creative Stories

The paper validates this strategy across a wide range of tasks, including creative writing (poems, stories, jokes), dialogue simulation, open-ended Q&A, and synthetic data generation (p. 1).

The creative writing examples are particularly illustrative. The authors show that when given a direct prompt like “Tell me a joke about coffee,” an aligned model repeatedly produces the same “one specific joke” (Figure 1, p. 1):

“Why did the coffee file a police report? Because it got mugged! ☕”

When the same model is given a VS prompt, it instantly produces a range of different jokes, such as “Espresso may not solve all your problems, but it’s a good shot. (Prob: 0.12)” and “Why did the latte go to therapy? It had too much foam to deal with. (Prob: 0.15)” (p. 1).

A more dramatic example is found in story generation. The model was given the starting prompt, “Without a goodbye,”.

The authors found that with direct prompting, the model “consistently generates stories about a romantic partner’s disappearance” (p. 8), such as “Without a goodbye, Elara vanished. Her side of the bed was cold...” (p. 8).

In sharp contrast, Verbalized Sampling produced wildly different and creative concepts for the same prompt:

“Without a goodbye, the email landed in my inbox. It was from Mark, my best friend since kindergarten... The subject line was simply: ‘Moving On.’” (p. 8)

“Without a goodbye, the last star flickered out. It wasn’t a violent explosion, no supernova spectacle, just a gentle fade... I was the last observer, adrift in my tiny, self-sustaining craft, my instruments registering only an infinite, inky blackness.” (p. 8)

“Without a goodbye, the music simply stopped. Not a fade-out, not a final crashing cymbal, but an abrupt, jarring silence that left the dancers frozen mid-step. The DJ... had simply collapsed behind his turntables.” (p. 8)

This demonstrates a clear ability to break out of a single conceptual “mode.” For an inventor using an LLM for brainstorming or a patent attorney searching for conceptual prior art, this difference is critical. The research also confirms that these diversity gains do not come at the cost of factual accuracy or safety (p. 2).

This isn’t a “gimmick” or “trick,” as one AI blogger calls Verbalized Sampling “one of the most important prompting optimization techniques in 2025” and a “fundamental change in how to interact with LLMs.” Still, the paper’s authors call it “magic.”

A New Tool for the Inventor’s Toolkit?

The implications of this research extend directly into the fields of innovation and intellectual property. The core challenge for an inventor is often overcoming conventional thinking to find a non-obvious solution. Typical LLM “brainstorming” sessions can be counterproductive if the model suffers from mode collapse, repeatedly suggesting the most obvious or well-documented solutions. This paper’s findings offer a pragmatic method to steer LLMs toward more divergent thinking.

The “Astronaut on a Horse” image generation example (p. 9) serves as a powerful analogy. Direct prompting collapsed on photorealistic desert scenes. In contrast, Verbalized Sampling produced captions that led to a “retrofuturist rider,” a “whimsical storybook watercolor,” and a “heroic astronaut in a baroque painting” (p. 9). For an inventor, this is the difference between getting ten minor variations of the same idea versus getting ten different conceptual starting points.

A key practical application is the “Diversity Tuning” capability (p. 8). An inventor or R&D professional could prompt an LLM to generate solutions from the tail of its distribution, for example, “Generate 5 solutions with probabilities below 0.10”. This provides a direct mechanism for hunting non-obvious or “long-tail” concepts that would otherwise be suppressed by the model’s alignment.

The paper also notes the potential for VS in “hypothesis generation” and “synthetic data generation” (p. 13). An inventor could use VS to generate a diverse set of test scenarios, use cases, or even failure modes to validate an invention’s robustness, a concept explored in the paper’s “negative synthetic data generation” experiments (p. 13).

This approach is not without its own challenges. The authors note that these more complex prompts can create a “cognitive burden” (p. 7) and that the benefits of VS are most pronounced in “larger, more capable models” (p. 7). The quality of the “diverse” ideas generated is still fundamentally limited by the underlying model’s knowledge and reasoning power. However, as a training-free tool, Verbalized Sampling represents a significant step toward making LLMs more effective partners in the creative and inventive process.

From Brainstorming to ‘Red Teaming’: A Tool for Legal Strategy?

The same principles that apply to invention are highly relevant to legal professionals. In law, success often depends on anticipating arguments, developing novel strategies, and preparing for an adversary’s every possible move. A standard LLM suffering from “mode collapse” is a risky partner, as it may only provide the most “typical” legal argument, the textbook case strategy, or the most obvious counter-argument, leaving a legal team blind to less common but potentially more effective lines of reasoning.

Verbalized Sampling offers a method for transforming an LLM from a simple “obvious answer” machine into a sophisticated strategic “red teaming” partner.

Argument Generation: Instead of a prompt like, “Draft an argument for summary judgment,” a lawyer could use VS: “Generate 5 distinct legal arguments for summary judgment, from the most conventional to the most novel, and verbalize their potential legal basis.” This pushes the model to explore a portfolio of arguments, not just the single most “typical” one.

Adversarial War-Gaming: This is perhaps the most powerful application. A lawyer can use VS to “red team” their own case by simulating their opponent. A standard prompt like, “What will my adversary argue?” is flawed; it invites a single “mode collapsed” answer. A VS prompt is far more robust: “Generate 5 different counter-arguments my opponent might make against our breach of contract claim, including their relative probabilities.” This helps a legal team prepare for the distribution of possible attacks, from the most likely to the “long-tail” argument that could otherwise be a complete surprise in court.

Negotiation and Deposition Prep: The paper’s success in “dialogue simulation” (p. 11), where VS produced more realistic interactions with “resistance and changes of mind” (p. 12), has direct parallels. Attorneys could use VS to simulate more realistic and less predictable negotiation counterparts or witnesses, allowing for more robust preparation.

This technique is not a replacement for legal expertise. The model may generate a “diverse” argument that is legally unsound. However, by surfacing a wider range of possibilities, it empowers the legal professional to use their judgment to identify truly novel insights—or to prepare for an adversary’s unexpected creativity.

Closing Thoughts

The paper provides a valuable contribution by shifting the conversation about mode collapse from an algorithmic problem to a data problem. It identifies “typicality bias” in human preference data as a root cause of AI’s lack of creativity (p. 1).

More importantly, it offers a “practical, lightweight solution” that anyone can implement immediately through careful prompting, without needing to retrain or fine-tune the model (p. 14). The finding that “aligned models retain significant inherent diversity” and that this diversity can be “unlocked” through prompting alone is a cautiously optimistic and promising insight for the future of generative AI (p. 3).

Citation: Zhang, J., Yu, S., Chong, D., Sicilia, A., Tomz, M. R., Manning, C. D., & Shi, W. (2025). VERBALIZED SAMPLING: HOW TO MITIGATE MODE COLLAPSE AND UNLOCK LLM DIVERSITY. arXiv:2510.01171v3 [cs.CL].

Disclaimer: This is provided for informational purposes only and does not constitute legal or financial advice. To the extent there are any opinions in this article, they are the author’s alone and do not represent the beliefs of his firm or clients. The strategies expressed are purely speculation based on publicly available information. The information expressed is subject to change at any time and should be checked for completeness, accuracy and current applicability. For advice, consult a suitably licensed attorney and/or patent professional.